The Complete Data Annotation Services Guide to Tools, Quality, Security, and Scale

Data annotation services are often seen as a tedious, behind-the-scenes job in AI. While everyone obsesses over algorithms and architectures, annotation quality quietly determines whether your AI rises or falls flat. It is like building a race car: the engine gets the attention, but you cannot get around the first bend without excellent tires.

But the question is, how do you start at all? Selecting the right annotation tool is not a piece of cake. Then there’s the tricky question of measuring quality beyond simple accuracy scores. And what about inherent biases lurking in your data? How do you find the annotation types that will improve a specific model’s performance fastest? Over the years, we have developed a few frameworks to tackle these questions.

Let’s dive into ten crucial aspects of data annotation, from choosing the right tools to ensuring data security, and we can avoid a few of those costly mistakes together.

Table of Contents:

- What is Data Annotation & Labeling?

- How Does Data Annotation and Labeling Enhance Workflows?

- Role of Data Annotation Services and Labeling in Industries

- Which Annotation Tool Best Fits Your Model and Budget?

- How to Scale AI Data Annotation Efficiently?

- In-house Versus Outsourced AI Data Annotation Strategy – What’s Best for You?

- Mitigating AI Data Annotation Bias and Ethics

- What Innovative Strategies Combat Inherent Annotation Bias in Datasets?

- Future of Data Annotation Services and Labeling

- The Bottom Line

What is Data Annotation & Labeling?

The driving force behind AI and ML technologies is the large volume of data they operate on. Extracting that data strategically can reveal insights, stories, and new ideas that strengthen business models. Before raw data can aid research or model training, it must be annotated and labeled.

- Data annotation refers to tagging raw text, videos, and images so that the data is organized.

- Data labeling refers to assigning more detailed labels to texts, images, and videos so that the raw data can be analyzed effectively.

Business giants, research organizations, and healthcare sectors depend heavily on data utilization to produce innovative solutions to targeted concerns.

How Does Data Annotation and Labeling Enhance Workflows?

Industries adopt data annotation and labeling to make their workflows more efficient in the following ways:

- eCommerce: to improve customer experiences and personalization

- Research sectors: high-quality annotated data to support AI-powered innovations

- Banking, Insurance, and Finance Industries: document-based processes, customer interactions, and digital services

- Social Networking Sites: content identification and monitoring, assessing emotions, and harmful content detection

- Agriculture Sector: disease detection, predicting yield estimations, and growth stages

Role of Data Annotation Services and Labeling in Industries

The reasons for the rise in demand for data annotation services and labeling in the market are:

- Enhanced Customer Services: Data annotation provides companies with customer journey patterns and behavior analysis, which helps personalize the eCommerce websites for a satisfactory customer experience.

- Survive in a Competitive Market: When businesses use data annotations to form efficient strategies for more innovations and operations, they stay ahead.

- High-Quality Data: When your data annotations and labeling are of high quality, the machine learning models can produce better outputs for more informed decisions.

- Efficiency and Enhanced Work Output: With data already annotated and labeled, the manual workforce can take a break from redundant data work and move towards more complex tasks, increasing the overall output and efficiency of the team.

- Education and Institutes: For high-level academic research and papers, institutes rely on large volumes of annotated data to keep their research and experimentation accurate.

The data annotation industry is in high demand to power AI-based programs that are set to revolutionize workplaces, academia, and homes.

Which Annotation Tool Best Fits Your Model and Budget?

Choosing annotation tools feels like dating, where every prospect looks nice in their profile, but compatibility is what counts. One retail client learned this after buying enterprise annotation software that their team refused to use. It was too complex for simple bounding boxes and too rigid for their workflow. Million-dollar shelf decoration!

The tool landscape is divided into three camps:

- General-purpose platforms

- Specialized solutions

- Build-your-own adventures.

General platforms like Labelbox or V7 work well if your needs are standard. Need to label cats and dogs? Perfect. Need to annotate medical imaging with 47 different tissue types? Maybe not.

Smart money looks at the total annotation cost, not just the tool cost. If a pricier tool doubles an annotator’s productivity, it pays for itself quickly. Tool selection should start with a pilot. Not a demo. Real pilot with real data and real annotators. One healthcare AI team ran pilots for weeks with three tools. The winner didn’t have the most features or the best price. It was the one where annotators actually smiled while working. Remember, happy annotators make quality annotations.
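To make the "total annotation cost" idea concrete, here is a minimal sketch. The `ToolOption` fields and every number in it are hypothetical pilot figures we made up for illustration, not real vendor pricing.

```python
from dataclasses import dataclass


@dataclass
class ToolOption:
    name: str
    license_cost: float    # total license fee for the project, in dollars (assumed)
    items_per_hour: float  # annotator throughput measured during the pilot (assumed)
    hourly_rate: float     # fully loaded annotator cost per hour (assumed)


def total_annotation_cost(tool: ToolOption, n_items: int) -> float:
    """License fee plus the labor needed to label n_items."""
    labor_hours = n_items / tool.items_per_hour
    return tool.license_cost + labor_hours * tool.hourly_rate


# Hypothetical pilot results: the premium tool costs 4x more but doubles throughput.
basic = ToolOption("basic", license_cost=5_000, items_per_hour=60, hourly_rate=20)
premium = ToolOption("premium", license_cost=20_000, items_per_hour=120, hourly_rate=20)

n_items = 500_000
basic_cost = total_annotation_cost(basic, n_items)
premium_cost = total_annotation_cost(premium, n_items)
print(f"basic: ${basic_cost:,.0f}  premium: ${premium_cost:,.0f}")
```

With these made-up figures the pricier tool comes out at roughly $103,000 against roughly $172,000 for the cheaper one, because labor dominates the bill. Your own pilot numbers may well point the other way.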

In short, match tool complexity to task complexity. Simple tasks need simple tools. Complex tasks need specialized ones. And always, always pilot with your actual team. The best tool is the one your people will actually use effectively.

How to Scale AI Data Annotation Efficiently?

Scaling up AI data annotation isn’t as easy as hiring more people. Anyone who tells you there’s a quick fix probably hasn’t spent hours sorting through confusing data. The main mistake? Assuming more annotators will magically fix quality issues.

You have to start with solid documentation and clear, well-written guidelines. If your instructions are unclear, results get messy, especially as your team grows. It’s essential to keep refining your annotation guide as you go, with help from the annotators themselves. They’re the ones who notice what’s missing or confusing.

To solve for scale, organizations are turning to pre-annotation tools, automated pipelines, and active learning. According to Springer research, active learning can help models achieve 95%+ of full-data performance by labeling just 20–24% of the dataset.

Active learning is especially valuable. Let your model highlight examples it finds difficult or cases where annotators disagree. There’s no need to spend time on easy cases when the tough ones are where you improve quality.
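One common way to let a model flag its difficult cases is uncertainty sampling. The sketch below ranks unlabeled items by predictive entropy and picks the top few for human labeling. The function name `select_most_uncertain` and the probabilities are our own illustrative assumptions, and entropy is only one of several possible uncertainty scores.

```python
import numpy as np


def select_most_uncertain(probs: np.ndarray, budget: int) -> np.ndarray:
    """Return indices of the `budget` items with the highest predictive entropy.

    probs has shape (n_items, n_classes); each row is a probability distribution.
    """
    eps = 1e-12  # avoids log(0) for fully confident predictions
    entropy = -np.sum(probs * np.log(probs + eps), axis=1)
    # argsort is ascending, so take the last `budget` indices and reverse them
    return np.argsort(entropy)[-budget:][::-1]


# Hypothetical model outputs for four unlabeled items and two classes.
probs = np.array([
    [0.99, 0.01],  # easy case
    [0.55, 0.45],  # ambiguous
    [0.90, 0.10],
    [0.50, 0.50],  # most ambiguous
])
to_label = select_most_uncertain(probs, budget=2)
print(to_label)  # indices 3 and 1, the two most ambiguous items
```

The easy case (index 0) never reaches an annotator in this round, which is the whole point: the labeling budget goes to the items the model is least sure about.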

Finally, remember the human side. Fatigue, inconsistency, and communication can make or break your project. Train your annotators well, but listen to their feedback. They’ll spot problems you might miss. Treat your team as partners, not just workers, and you’ll get better results. Ultimately, finding the right balance between automation and human judgment is key. And honestly, managing the human element is often the most challenging part.

In-house Versus Outsourced AI Data Annotation Strategy – What’s Best for You?

The debate about whether to keep AI data annotation in-house or outsource it comes down to key factors: trust, control, speed, and cost. If you handle everything internally, you keep sensitive data, like private designs or user information, within your team. This means your staff, who know your business well, can manage the details and context better. They’re invested in the project’s success, so they care about quality. But building an internal team is slow, often expensive, and, honestly, finding people willing to do repetitive annotation work can be tough. Boredom can lead to mistakes, which hurts data quality.

On the other hand, outsourcing offers speed and efficiency, great for straightforward tasks where instructions are clear. You can process a lot of data quickly this way. But it’s not entirely hands-off. You need to provide clear guidelines and check the results regularly. Sometimes, misunderstandings happen, and batches of work can be wrong, wasting time and resources. Also, security becomes a bigger concern when data leaves your organization. You need strong contracts and regular checks to protect your information.
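"Check the results regularly" can be as simple as auditing a random sample of each delivered batch against labels you trust. The sketch below assumes you keep a small gold set labeled in-house; `spot_check`, the 5% threshold, and the toy data are hypothetical choices for illustration.

```python
import random


def spot_check(batch, gold, sample_size, max_error_rate, seed=0):
    """Audit a random sample of a vendor batch against trusted gold labels.

    batch and gold both map item id -> label.
    Returns (accepted, observed_error_rate).
    """
    rng = random.Random(seed)
    audit_pool = sorted(set(batch) & set(gold))  # items present in both
    if not audit_pool or sample_size < 1:
        raise ValueError("need at least one item that has a gold label")
    sample = rng.sample(audit_pool, min(sample_size, len(audit_pool)))
    errors = sum(batch[item] != gold[item] for item in sample)
    error_rate = errors / len(sample)
    return error_rate <= max_error_rate, error_rate


# Toy data: the vendor batch disagrees with the gold set on 2 of 10 items.
gold = {f"item{i}": "cat" for i in range(10)}
batch = dict(gold)
batch["item3"] = "dog"
batch["item7"] = "dog"

accepted, error_rate = spot_check(batch, gold, sample_size=10, max_error_rate=0.05)
print(accepted, error_rate)
```

Here the batch is rejected because the observed error rate (20%) exceeds the 5% threshold. Catching that on a sample is far cheaper than discovering it after training.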

So, what’s the best choice? There isn’t a one-size-fits-all answer. For complex or sensitive tasks that need constant feedback, in-house teams are usually safer. For high-volume, repetitive work, outsourcing can really help. Most companies use a mix of both, adapting as needed to handle the practical challenges of real-world AI projects.

Mitigating AI Data Annotation Bias and Ethics

When it comes to making AI data annotation less biased, it is tempting to concentrate on algorithms or sophisticated models. To be honest, though, the greatest influences (as well as the most effective solutions) originate with people. Human annotators bring their own life experiences, and without care, their biases carry over into the data. An AI system does not learn some objective truth; it learns whatever people label the data as. When most annotators share a background, their sense of what counts as normal inevitably shapes how the AI behaves. For example, a facial expression may read one way to one person and as sad to another. These disparities are not small, and they become part of the AI itself.

This is why diversity in an annotation team is not just a nice-to-have; it is a technical requirement. Bringing together people from different cultures and with different experiences helps identify the mistakes or blind spots that any single group would otherwise miss.
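Disagreements like the facial-expression example can be measured rather than guessed at. A standard statistic for two annotators is Cohen's kappa, which corrects raw agreement for the agreement you would expect by chance. The sketch below is a plain-Python version with made-up labels; the helper name and data are assumptions for illustration.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Agreement between two annotators on the same items, corrected for chance."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # chance agreement: probability both pick the same label independently
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Made-up labels from two annotators for the same six face images.
annotator_1 = ["sad", "sad", "neutral", "happy", "neutral", "sad"]
annotator_2 = ["sad", "neutral", "neutral", "happy", "sad", "sad"]
kappa = cohens_kappa(annotator_1, annotator_2)
print(round(kappa, 2))  # about 0.45
```

The two annotators agree on four of six images, yet kappa is only about 0.45 once chance agreement is removed. Tracking this number per label category shows where guidelines, or the mix of annotators, need attention.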

Future-proofing AI is commonly mentioned in terms of model complexity or ethical regulations, yet the real source of innovation is the data itself and the way it is annotated. It is not a one-time job; rather, this is an ongoing dynamic process that entails a strong and flexible methodology.

1. Embracing Flexibility in Annotation Processes

A common pitfall is the initial setup of a dataset with what seem like perfect labels, only for them to become outdated as a project evolves. New product types or unforeseen user behaviors can render a meticulously annotated dataset inaccurate, slowing down innovation rather than accelerating it. To avoid this, we must build annotation processes with flexibility at their core. This means creating a system where you can easily update and add new labels, or even change category definitions without disrupting the entire dataset. A flexible annotation process allows the model to grow alongside the business, welcoming change instead of resisting it.
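One way to build that flexibility in is to version the label schema and migrate stored annotations instead of relabeling everything. The sketch below is a minimal illustration under our own assumptions: `LabelSchema`, `migrate`, and the retail labels are hypothetical, not part of any particular tool.

```python
from dataclasses import dataclass, field


@dataclass
class LabelSchema:
    version: int
    labels: set
    # maps a retired label name to its replacement in this version
    renames: dict = field(default_factory=dict)


def migrate(annotations, schema):
    """Rewrite stored labels to the given schema version.

    Returns (migrated, needs_review). Labels that cannot be mapped are queued
    for human review instead of being silently dropped.
    """
    migrated, needs_review = {}, []
    for item_id, label in annotations.items():
        label = schema.renames.get(label, label)
        if label in schema.labels:
            migrated[item_id] = label
        else:
            needs_review.append(item_id)
    return migrated, needs_review


# Hypothetical change: "trainer" is renamed to "sneaker" and "misc" is retired.
v2 = LabelSchema(version=2, labels={"sneaker", "boot", "bag"},
                 renames={"trainer": "sneaker"})
stored = {"img1": "trainer", "img2": "bag", "img3": "misc"}
migrated, needs_review = migrate(stored, v2)
print(migrated, needs_review)
```

Only `img3` goes back to an annotator; everything else carries over automatically, so a category change costs a small review queue rather than a full relabeling pass.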

2. The Importance of Tools and a Human-Centered Approach

While tools are essential, no single proprietary solution can solve this challenge. It is crucial to have tools that are interoperable and easy to update, as getting locked into a rigid system can make future changes a nightmare.

Moreover, the people doing the annotation are a vital part of the process. Their feedback is invaluable for catching where the ideal system doesn’t align with the complexities of the real world. Acknowledging their role and keeping them in the loop is key to an agile and accurate annotation process. In fact, a study by Appen found that high-quality, human-annotated data can reduce AI model development time by up to 30%. This highlights the immense value of a human-in-the-loop approach.

3. Acknowledging the Unknowable

Finally, we must approach data annotation with humility. We don’t have all the answers for what tomorrow’s AI will need. The goal isn’t to build a perfect, rigid system, but to cultivate a process that can adapt and thrive over time. Just as a strong, flexible vine can grow and climb, a well-structured and adaptable annotation system can support innovation far into the future.

What Innovative Strategies Combat Inherent Annotation Bias in Datasets?

Now, let’s talk about annotation bias. It’s a sneaky beast. On one project, we built a model to detect skin cancer from images. It seemed straightforward. We had a huge dataset, meticulously labeled by dermatologists. However, while accurate on the test set, the model bombed entirely in the real world. Why?

Turns out, the dataset heavily over-represented fair-skinned individuals with clear, textbook examples of lesions. Real patients? A lot more diversity in skin tones, lighting, and the presentation of the disease. The model had learned to recognize “skin cancer on white skin under ideal conditions,” not, you know, actual skin cancer.

Confronting annotation bias requires a multifaceted approach that goes beyond surface-level diversity. These are the practices that leading organizations use to reduce bias systematically in their annotation workflows.

1. Adversarial Debiasing

Well, one promising approach is adversarial debiasing. It’s about training a secondary model to predict sources of annotation bias: things like the annotator’s background, training, or even the equipment used. Once you have that bias model, you can penalize the main model (like an image classifier) for relying on those biased features. It’s like saying, “Hey, model, stop thinking about the fact that all of these photos were taken with the same camera! It is the real disease you need to concentrate on!”

2. Active Learning

Another technique that I’ve seen work is active learning. Instead of passively accepting whatever data comes along, you actively select examples where the model is most uncertain, cases where the annotation quality might be questionable or the data itself is ambiguous. Then, you get those examples re-annotated, maybe by a different group of annotators, or through a more rigorous process. It’s like being a detective, always looking for the weak points in the evidence.

3. Data Augmentation

Data augmentation is a good one, too. Augmentation helps when you can synthetically generate more data that balances out the bias. For example, with the skin cancer dataset above, we could digitally alter skin tones to balance the dataset.
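Before generating anything, it helps to know how far out of balance each group is. The sketch below only computes those gaps; it does not perform the image alteration itself. The skin-tone groupings and counts are hypothetical, and matching the largest group is just one possible balancing target.

```python
from collections import Counter


def augmentation_targets(group_labels):
    """How many synthetic samples each group needs to match the largest group."""
    counts = Counter(group_labels)
    target = max(counts.values())
    return {group: target - n for group, n in counts.items()}


# Hypothetical dataset of 1,000 images grouped by skin-tone range.
groups = ["I-II"] * 700 + ["III-IV"] * 250 + ["V-VI"] * 50
deficits = augmentation_targets(groups)
print(deficits)  # {'I-II': 0, 'III-IV': 450, 'V-VI': 650}
```

A gap as large as the last one is a signal in itself: synthetic variation of 50 source images can only go so far, so collecting more real examples for that group is usually worth considering alongside augmentation.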

Of course, there’s no silver bullet. It’s a constant process of auditing the data, questioning assumptions, and experimenting with different techniques. Sometimes, it’s as simple as just having a more diverse team of annotators.
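Auditing can start with something very small: breaking a single accuracy number down by subgroup. The sketch below uses made-up predictions and a hypothetical two-way grouping to show how a respectable overall score can hide a failing group.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy computed separately for each subgroup."""
    correct, total = defaultdict(int), defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


# Made-up evaluation data: 1 = lesion present, 0 = absent.
y_true = [1, 0, 1, 1, 0, 1, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 0, 0]
groups = ["light", "light", "light", "light", "light", "dark", "dark", "dark"]

report = accuracy_by_group(y_true, y_pred, groups)
overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(overall, report)
```

Overall accuracy here is 75%, which hides the split: every "light" example is correct, while only one in three "dark" examples is. That is the same pattern as the skin cancer story above.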

Future of Data Annotation Services and Labeling

According to market reports, the data annotation market is projected to grow at a compound annual growth rate (CAGR) of 26.5% through 2030. The industry will play a major role in global technological advancements, with heavy demand from the AI-powered and healthcare sectors. Data annotation services are fundamental to training AI systems and machine learning projects for accurate outputs.
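To get a feel for what a 26.5% CAGR means, compounding is easy to work out. The sketch below assumes a six-year horizon purely for illustration, since the base year is not stated here, and it uses a starting value of 1.0 so that the result reads as a growth multiple.

```python
def compound_growth(start_value: float, cagr: float, years: int) -> float:
    """Value after compounding at a constant annual growth rate."""
    return start_value * (1 + cagr) ** years


# Assumed six-year horizon; 1.0 as the start value gives a growth multiple.
multiple = compound_growth(1.0, 0.265, 6)
print(f"{multiple:.2f}x")
```

Under that assumption the market would end up roughly four times its starting size.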

With 2024 being the year of Artificial Intelligence and more organizations globally depending on AI for efficient workflows, the data annotation market will thrive and expand with more technological advancements.

Here are a few examples of how data annotation will massively affect industries:

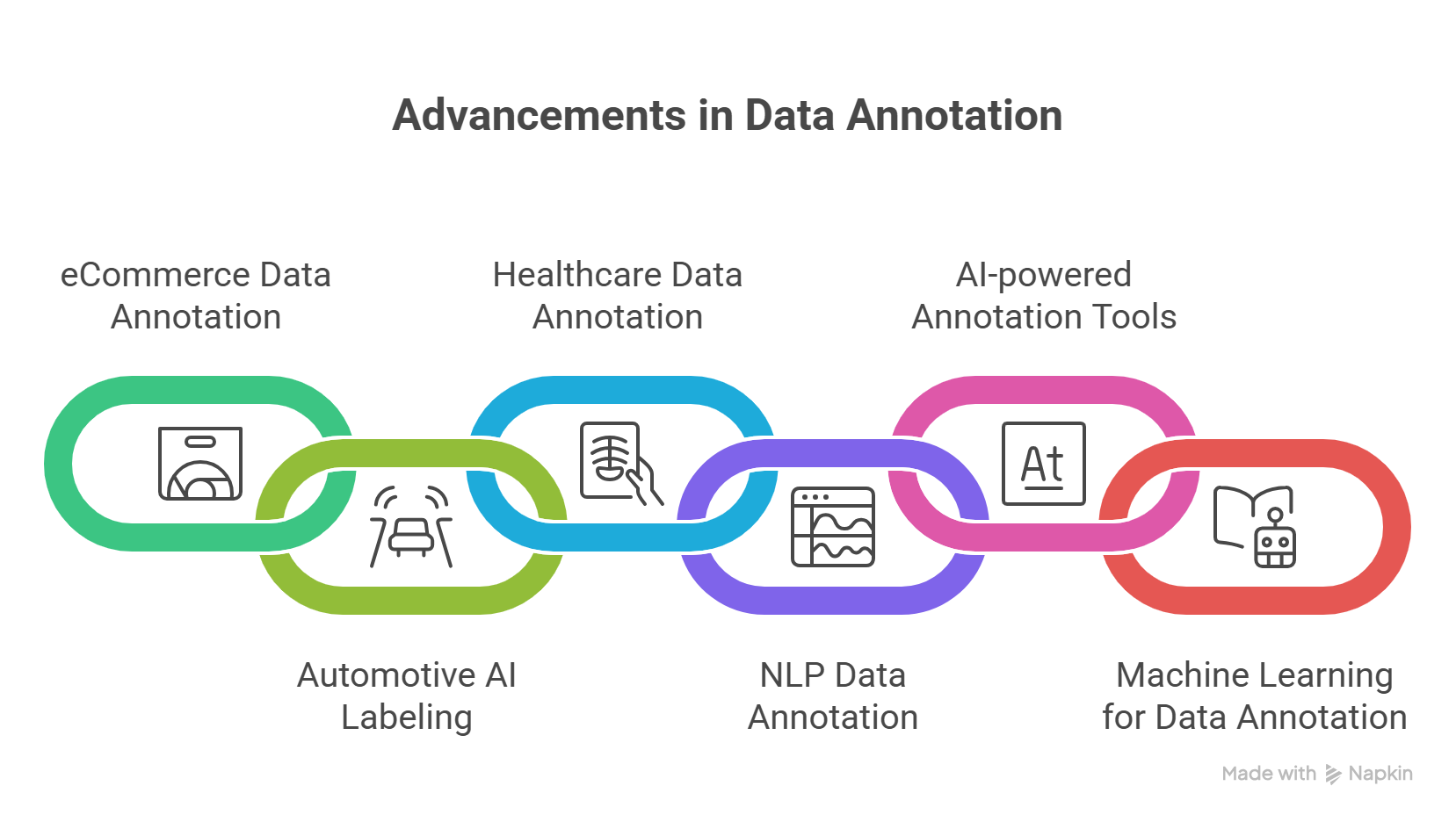

1. Surge of Data Annotation in eCommerce

The online eCommerce market depends heavily on high-quality data annotations to provide personalization to its customers.

For example, product recommendations, special offers, customized shipments, and unique suggestions are powered by analyzing annotated data that provides information on customer patterns. This process encourages customer engagement and drives more online sales.

The involvement of AI image labeling and annotation will massively drive eCommerce operations in the future.

2. AI Labeling in the Automotive Industry

Self-driving cars are powered by high-quality data annotations that back up AI functioning, enabling the AI to understand its surroundings, make basic decisions, and navigate without human intervention.

The perception systems depend on labeled images and videos to develop a reliable self-driving system that can maneuver independently. Automotive industries will rely heavily on data annotations to power their automated innovations and survive a competitive sector.

3. Data Annotations and Labeling in Healthcare

The healthcare sector is one of the major consumers of AI-driven medical technologies for enhancing healthcare training and workflows for doctors.

For example, medical procedures use AI-powered computer vision systems that rely on labeled medical images to identify possible injuries and provide patient reports. Additionally, AI systems can be used to scan MRIs, CT scans, and X-rays to detect injuries or medical conditions.

This automation is based on the medical data annotations and labeling that teach AI systems how to read images.

4. Use In Natural Language Processing (NLP)

NLP applications depend on accurate data annotations to help them understand human language better. With the rise of NLP applications such as chatbots, voice applications, smart assistants, and sentiment analysis, accurate data annotation will be imperative for producing efficient NLP tools.

5. AI-powered Annotation Tools

With such a huge demand for data annotation services, relying only on a manual workforce for data annotation isn’t practical in the long run. Many organizations are integrating AI-powered data annotation tools to handle big data and save time.

6. Machine Learning for Data Annotation

Machine learning algorithms can further enhance the quality of data annotations and AI image labeling. This process produces high-quality data to deliver to organizations needing high-precision data.

Going forward, data annotation companies that integrate these advanced technologies will be able to deliver more output, more effectively.

The Bottom Line

Anyone promising simple solutions to these complex challenges is probably overselling. Success comes from accepting the messiness while building toward clarity. Start with solid data foundations. Add governance before you need it. Scale gradually. Measure what matters. Choose tools that fit your reality, not vendor visions.

Most importantly, remember that AI amplifies what you feed it. Good data practices become great AI outcomes, and bad habits become expensive failures. The organizations winning with AI aren’t the ones with the biggest budgets or fanciest tools. They’re the ones who treat data as a strategic asset and act accordingly.

At Hurix Digital, we’ve been helping organizations transform their messy data challenges into competitive advantages. We bring our two decades of experience to every project, whether you’re building educational AI models or scaling enterprise learning systems.

Let’s talk about how to make your data work as hard as you do. Your AI deserves better than garbage labels!

Vice President – Content Transformation at HurixDigital, based in Chennai. With nearly 20 years in digital content, he leads large-scale transformation and accessibility initiatives. A frequent presenter (e.g., London Book Fair 2025), Gokulnath drives AI-powered publishing solutions and inclusive content strategies for global clients
