Beyond ChatGPT: Advanced AI Tools Transforming Corporate Learning

A year ago, senior executives were forwarding screenshot after screenshot of clever answers from a well-known chatbot, as if witty paragraphs equaled strategic progress. The novelty wave has crested. The serious question in boardrooms now: what sits beyond a general chat interface that actually upgrades workforce capability, trims waste, shrinks time to skill, and gives finance a line of sight between talent spend and operating leverage? The shift feels a little like early electrification. First came party tricks: light bulbs in lobbies. Real productivity only arrived when factories rewired entire production flows. L&D is at that wiring stage.

Inside firms, there is still a mismatch between optimism at the top and lived reality below. Our latest L&D Maturity Index report shows executives often overrate strategic alignment (C‑suite confidence far higher than practitioner confidence) while only a small minority (around 18%) operate at a “Pioneering” tier with data stitched into decision loops. That gap matters because superficial adoption (a chat window bolted on to stale modules) delivers shallow wins that fade, while deeper adoption (skill graphs, adaptive micro journeys, multimodal simulation, trustworthy analytics) compounds.

This piece unpacks twelve blunt questions leadership teams are actually asking. Each section keeps an eye on credibility, examining what constitutes hype, what is quietly effective, where risk lies, how to sequence moves, and how to discuss return in a language a CFO respects. Tone matters. No magic wand claims. Some friction is healthy; a bit of skepticism forces precision. The focus phrase “AI in Corporate Learning” appears in search dashboards, yet internally, nobody searches for the phrase.

Forget the hype. What matters now is a plain toolkit: accurate info pulled when needed, smart helpers, realistic practice, up‑to‑date skill maps, and tracking that ties improvement to business results. That’s the practical layer we explore in this blog.

Table of Contents:

- Why Do Employees Fear AI Learning Systems Will Replace Human Development?

- How Can Organizations Demonstrate AI Enhances Rather Than Replaces Human Connection?

- Why Does “Beyond ChatGPT” Matter: What Gap Do Advanced AI Tools Fill in Corporate Learning?

- Which AI Architectures are Actually Moving the Needle in Enterprise L&D?

- How are Firms Turning Raw Skills Data into Action with AI Skill Graphs and Competency Intelligence?

- How Can Organizations Implement AI-Driven Content Curation Without Overwhelming Employees?

- Why are Intelligent Tutoring Systems Becoming Essential for Technical Skills Development?

- What Role Does Natural Language Processing Play in Personalized Learning Experiences?

- How Do Predictive Analytics Tools Help Identify Future Skill Gaps?

- How Can Virtual Reality Training Simulations Accelerate Complex Skill Development?

- How Do Automated Content Generation Tools Maintain Quality While Scaling Learning Materials?

- What Governance, Ethics, and Data Safeguards Keep AI-Powered L&D from Going Off the Rails?

- Moving Forward

Why Do Employees Fear AI Learning Systems Will Replace Human Development?

The fear of replacement runs through every conversation about AI in learning, though it rarely gets voiced directly in company meetings. With advanced AI-powered tools like Dictera, instructional designers watch AI generate in minutes training content that once took weeks. They see chatbots answering learner questions with increasing sophistication. The logical conclusion seems obvious: if AI can teach, why do we need human teachers?

This fear reflects a misunderstanding of AI’s actual capabilities, but also a very real trend in how organizations position these tools. When companies emphasize cost savings and efficiency gains, employees hear “fewer humans needed.” When executives celebrate AI’s ability to “scale personalized learning,” trainers wonder where they fit in a world of algorithmic instruction.

The replacement anxiety intensifies because many learning professionals entered their field specifically for human connection. Creating engaging experiences, reading the room and adapting accordingly, and coaching learners during difficult skill development are roles that seem fundamentally human. Watching AI attempt these tasks feels like witnessing the automation of empathy itself.

Historical precedent fuels these concerns. Employees have watched automation transform manufacturing, customer service, and data processing. Each wave promised to “augment” human workers but often resulted in significant job displacement. Why should AI in learning be different? The burden of proof lies with organizations to demonstrate genuine augmentation rather than disguised replacement.

Reality proves more nuanced than either extreme position suggests. AI excels at content curation, progress tracking, and pattern recognition, but it struggles with context, emotional intelligence, and the messy realities of human development. The most effective implementations recognize this division of labor: AI handles the systematic and scalable, while humans manage the complex and contextual.

How Can Organizations Demonstrate AI Enhances Rather Than Replaces Human Connection?

Demonstrating enhancement over replacement requires more than words. It demands visible proof that AI strengthens rather than severs human connections in learning. Smart organizations create compelling examples that employees can see, touch, and experience themselves.

The key lies in reframing AI’s role from teacher to teaching assistant. When a global technology firm introduced AI-powered learning recommendations, it positioned them as “giving trainers superpowers” rather than replacing trainer judgment. Transparency about AI limitations builds trust. When organizations say plainly that AI cannot understand cultural nuance, provide emotional support during career transitions, or inspire through personal experience, employees see that their worth is not up for replacement.

Creating collaborative workflows where AI and humans visibly work together demonstrates daily enhancement.

Why Does “Beyond ChatGPT” Matter: What Gap Do Advanced AI Tools Fill in Corporate Learning?

Executives have already watched a chatbot spit out neat summaries of policy PDFs. Useful, sure, but real working systems need more than long answers in a chat window. There are several gaps.

First, accuracy in context: generic models still make things up or flatten important details in areas like drug labeling or energy trading rules. Second, depth: a simple Q&A box doesn’t tie each question or response back to a specific skill, so leaders still can’t see who can actually do what. Third, it sits outside the real flow of work: people build capability inside code editors, sales tools, or design software, and a separate floating chat box quickly becomes something they open less and less. Finally, proof is weak: free text exchanges don’t create solid, auditable evidence that someone’s proficiency really improved. So the novelty is there, but the backbone needed for serious capability building is missing.

Consider compliance. Standard eLearning poured static slides into modules. A beyond-chat approach builds a policy reasoning coach that ingests updated regulations nightly, asks employees to reverse-explain clauses in their own words, flags semantic gaps to a manager’s dashboard, and pushes only delta micro-content next quarter. Time in seat falls, measured retention rises, and audit defensibility strengthens because each interaction is timestamped, source‑linked, and skill‑tagged.

The L&D Maturity Index report underscores that maturity correlates with analytics depth. Firms stuck at ad‑hoc stages mistake access to a chat model for transformation; higher tiers instrument behavior, feedback loops, and outcome linkage. The “beyond” conversation reframes the stack from a curiosity tool to an adaptive capability system.

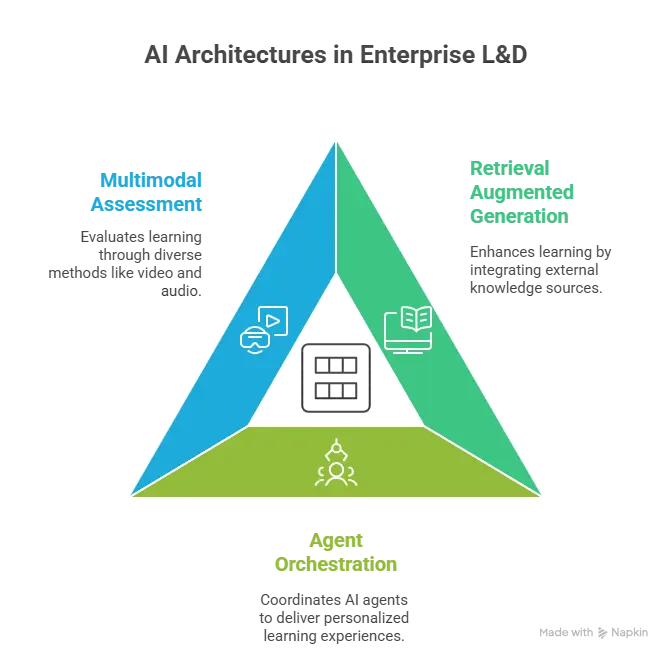

Which AI Architectures are Actually Moving the Needle in Enterprise L&D?

Buzzword bingo can blur the signal. Under the hood, a handful of architectures keep surfacing in environments where business impact shows up:

- Retrieval Augmented Generation (RAG): Instead of a model inventing answers, it retrieves curated internal fragments (tribal wiki notes, code snippets, etc.), then composes an answer with citations.

- Agent Orchestration: Nested agents handle sub-tasks. One agent parses a skill gap, another assembles a schedule, a third evaluates practice artifacts, and a fourth escalates to a coach. Why it helps: decomposition yields more consistent progress tracking than monolithic chat.

- Multimodal Assessment: Vision, text, and audio pipelines score an artifact such as a sales role-play video, measuring tone, pace, and objection-handling patterns. Gains: richer proficiency evidence without managers sitting through dozens of live sessions.
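The RAG pattern in the list above can be sketched in a few lines. This is a toy illustration under stated assumptions, not a production design: the `Fragment` type, the `wiki-…` source ids, and the word-overlap scoring are all hypothetical stand-ins (a real system would typically use embeddings and a vector index), and the final call to a language model is left out. What it shows is the shape of the idea: retrieve first, cite sources, and refuse to answer when nothing relevant is found.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fragment:
    source_id: str  # hypothetical identifier for an internal document
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase, split on whitespace, and strip simple punctuation."""
    return {word.strip(".,;:?!()").lower() for word in text.split()} - {""}


def retrieve(query: str, corpus: list[Fragment], top_k: int = 2) -> list[Fragment]:
    """Rank fragments by word overlap with the query; drop those with no overlap."""
    query_terms = tokenize(query)
    scored = [(len(query_terms & tokenize(f.text)), f) for f in corpus]
    scored = [(score, f) for score, f in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [f for _, f in scored[:top_k]]


def build_grounded_prompt(query: str, fragments: list[Fragment]) -> str:
    """Compose a prompt that restricts the model to the cited fragments."""
    if not fragments:
        return f"No internal source found for: {query}. Escalate to a human expert."
    context = "\n".join(f"[{f.source_id}] {f.text}" for f in fragments)
    return (
        "Answer using only the sources below and cite their ids.\n"
        f"{context}\n"
        f"Question: {query}"
    )


# Illustrative corpus; the content is invented for the example.
corpus = [
    Fragment("wiki-014", "Expense claims above 500 USD need director approval."),
    Fragment("wiki-027", "Code review requires two approvals before merge."),
    Fragment("wiki-033", "Laptops are refreshed every three years."),
]
question = "Who gives approval for expense claims?"
hits = retrieve(question, corpus)
prompt = build_grounded_prompt(question, hits)
print(prompt)
```

The empty-result branch matters as much as the happy path: a system that says "no source found" is easier to trust than one that improvises.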

Stack layering matters. RAG supplies grounded content. A co‑pilot embedded in the work tool surfaces it contextually. An agent tracks progress across tasks. The skill graph updates probabilities of readiness for the target role. Telemetry flows outward to analytics that correlate completion with performance KPIs (reduced defect rate, faster deal cycle).

L&D Maturity Index report findings show “Pioneering” orgs integrate analytics and adaptive experience layers; laggards bolt disparate tools without data coherence. Architecture maturity involves convergence: fewer silos, more shared taxonomies. Effective design teams now sketch “data lineage diagrams” for capability the same way engineers diagram microservices. That shift from tool sprawl toward composable architecture is where the real movement is occurring.

How are Firms Turning Raw Skills Data into Action with AI Skill Graphs and Competency Intelligence?

People used to say “skills data” and just meant a spreadsheet showing who finished which courses. Now, better systems treat ability as a living map. In this map, each skill is represented by a dot, and related skills are connected by lines. Every line indicates the strength of support between two skills or the order in which one skill is required to precede another. Each skill dot collects proof: quiz or test scores, real project samples, manager thumbs‑ups, and real work signals like code quality. All of that evidence is weighted, so the picture updates as people do more real work, not just as they click through classes.

Why use a graph? Linear taxonomies freeze reality. A graph captures lateral moves. Example: a cloud engineer strong in Kubernetes orchestration and policy compliance is, with modest domain coaching, a viable candidate for platform governance roles. The graph can surface that hidden pathway faster than a manager’s gut.

Actionability emerges when the graph is not a static dashboard but a living driver of interventions. For succession planning, talent teams query: “List internal candidates who can reach Data Product Owner readiness by Q4 with fewer than 60 hours of development.” The engine returns names with path projections, required practice modules, and potential mentors. Finance gains forecast capacity instead of reacting to external hiring delays.
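A readiness query like the one above reduces to a gap calculation once skills and levels are explicit. The sketch below is a simplified illustration: the role requirements, the hours-per-level estimates, and the three profiles are invented, and a real engine would draw them from the skill graph rather than hard-coded dictionaries.

```python
# Hypothetical requirements for a target role: skill -> required level (0-3).
ROLE_REQUIREMENTS = {"data_modeling": 3, "stakeholder_mgmt": 3, "sql": 2}

# Assumed development effort to gain one level in each skill, in hours.
HOURS_PER_LEVEL = {"data_modeling": 20, "stakeholder_mgmt": 15, "sql": 10}


def hours_to_ready(profile: dict[str, int]) -> int:
    """Sum the estimated hours needed to close every skill gap for the role."""
    total = 0
    for skill, required in ROLE_REQUIREMENTS.items():
        gap = max(0, required - profile.get(skill, 0))
        total += gap * HOURS_PER_LEVEL[skill]
    return total


def candidates_within(profiles: dict[str, dict[str, int]], max_hours: int) -> list[tuple[str, int]]:
    """Return (name, hours) pairs needing fewer than max_hours, closest first."""
    estimates = [(name, hours_to_ready(profile)) for name, profile in profiles.items()]
    eligible = [(name, hours) for name, hours in estimates if hours < max_hours]
    return sorted(eligible, key=lambda pair: (pair[1], pair[0]))


# Invented profiles for illustration.
profiles = {
    "Asha": {"data_modeling": 2, "stakeholder_mgmt": 3, "sql": 2},
    "Ben": {"data_modeling": 1, "stakeholder_mgmt": 2, "sql": 2},
    "Chloe": {"sql": 2},
}
shortlist = candidates_within(profiles, max_hours=60)
print(shortlist)
```

The estimate is only as good as the hours-per-level assumptions, which is one reason human review stays in the loop for real succession decisions.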

There is a risk of false precision. Overfitting to syntactic similarity can misclassify nuanced competencies (regulatory pharmacovigilance vs. general data quality). Human review loops on higher-stakes career decisions remain vital. Healthy programs publish transparency notes on which signals drive which readiness score, recency decay parameters, and handling of sparse evidence.
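To make the transparency point concrete, here is one way a readiness score could combine weighted signals with recency decay while declining to score sparse evidence. The weights, the 180-day half-life, and the two-item minimum are assumptions picked for illustration, exactly the kind of parameters a transparency note would publish.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Evidence:
    kind: str        # "quiz", "project", or "manager_endorsement"
    score: float     # normalized to the range 0..1
    age_days: float  # days since the evidence was recorded


# Assumed parameters; a real program would publish and tune these.
SIGNAL_WEIGHTS = {"quiz": 0.2, "project": 0.5, "manager_endorsement": 0.3}
HALF_LIFE_DAYS = 180.0
MIN_EVIDENCE = 2


def proficiency(evidence: list[Evidence]) -> Optional[float]:
    """Weighted average of scores, with each weight halved every HALF_LIFE_DAYS.

    Returns None when there is too little evidence to score responsibly.
    """
    if len(evidence) < MIN_EVIDENCE:
        return None
    weighted_sum = 0.0
    weight_total = 0.0
    for item in evidence:
        decay = 0.5 ** (item.age_days / HALF_LIFE_DAYS)
        weight = SIGNAL_WEIGHTS[item.kind] * decay
        weighted_sum += weight * item.score
        weight_total += weight
    return weighted_sum / weight_total


recent_quiz = Evidence("quiz", 1.0, age_days=0)
older_project = Evidence("project", 0.5, age_days=180)
print(proficiency([recent_quiz, older_project]))
print(proficiency([recent_quiz]))  # too sparse, so no score is produced
```

Returning no score for thin evidence is a deliberate choice: a blank is more honest than a confident-looking number built on a single quiz.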

Using the graph for measurement flips the narrative from seat time to outcome. Instead of saying “1,000 employees finished security module,” the dashboard reports “Privileged access misconfigurations dropped 32 percent after targeted micro interventions to cohorts with low identity policy proficiency score.” That reframes L&D credibility.

Maturity-wise, Pioneers in the L&D Maturity Index report embed skill graph outputs into workforce planning cycles, not sidecar dashboards. The intelligence sits inside quarterly business reviews, influencing product roadmap feasibility assessments. That is competency data in action, rather than ornamental analytics.

How Can Organizations Implement AI-Driven Content Curation Without Overwhelming Employees?

Content overload has become the modern workplace’s biggest learning killer. It’s no wonder employees feel paralyzed by choice when companies subscribe to multiple platforms, create internal resources, and encourage knowledge sharing. By one often-cited estimate, the average knowledge worker spends about 2.5 hours a day searching for information they need. That’s not learning; it’s digital archaeology.

Smart content curation AI acts like a highly skilled librarian who knows every employee personally. These systems analyze job roles, past learning behavior, current projects, and even calendar entries to surface exactly what someone needs, exactly when they need it. But here’s where most implementations fail: they try to do too much too fast. Successful organizations start small. They pick one critical skill area or department and perfect the curation experience there before expanding.

The key lies in progressive disclosure. Rather than showing employees hundreds of available resources, AI surfaces three to five highly relevant options. Think Netflix recommendations versus a library catalog. One invites exploration; the other induces paralysis. The system learns the preferences of employees as they engage with content, such as format, difficulty level, and optimal length of sessions.
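Progressive disclosure is simple to express in code: score everything, show almost nothing. The sketch below assumes a hypothetical `Resource` record and a hand-written scoring rule (skill overlap plus small bonuses for preferred format and session length). Real systems learn these weights from engagement data; the fixed bonuses here are placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    title: str
    skills: frozenset[str]
    minutes: int
    fmt: str  # e.g. "video" or "article"


def relevance(resource: Resource, target_skills: frozenset[str],
              preferred_fmt: str, max_minutes: int) -> float:
    """Score by skill overlap, with assumed bonuses for format and length fit."""
    overlap = len(resource.skills & target_skills)
    if overlap == 0:
        return 0.0
    score = float(overlap)
    if resource.fmt == preferred_fmt:
        score += 0.5
    if resource.minutes <= max_minutes:
        score += 0.5
    return score


def shortlist(resources: list[Resource], target_skills: frozenset[str],
              preferred_fmt: str, max_minutes: int, limit: int = 5) -> list[Resource]:
    """Return at most `limit` relevant resources, best first, ties by title."""
    scored = [(relevance(r, target_skills, preferred_fmt, max_minutes), r) for r in resources]
    scored = [(score, r) for score, r in scored if score > 0]
    scored.sort(key=lambda pair: (-pair[0], pair[1].title))
    return [r for _, r in scored[:limit]]


# Invented catalog for illustration.
catalog = [
    Resource("Kubernetes basics", frozenset({"kubernetes"}), 15, "video"),
    Resource("Policy as code", frozenset({"kubernetes", "policy"}), 40, "article"),
    Resource("Excel tips", frozenset({"excel"}), 10, "video"),
    Resource("Cluster security lab", frozenset({"kubernetes", "policy"}), 20, "video"),
]
picks = shortlist(catalog, frozenset({"kubernetes", "policy"}), "video", 30, limit=2)
print([r.title for r in picks])
```

The `limit` parameter is the whole point: the catalog can grow to thousands of items while the employee still sees a handful.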

Why are Intelligent Tutoring Systems Becoming Essential for Technical Skills Development?

Technical skills have a half-life problem. What you learned about cloud architecture two years ago might be partially obsolete today. Programming languages evolve, security protocols change, and regulatory requirements shift. But with this acceleration, traditional training methods like workshops, certification programs, and recorded tutorials cannot keep up.

Intelligent Tutoring Systems (ITS) address this by creating personalized, adaptive learning experiences that evolve in tandem with both the technology and the learner. Unlike static courses, these systems understand not just what someone knows but also how they learn best. They recognize when a developer struggles with object-oriented concepts rather than syntax issues and adjust their teaching approach accordingly.

The magic happens through continuous assessment and adjustment. As an employee works through coding exercises, the ITS analyzes their approach, not just their answers. It notices if someone consistently makes similar logical errors or if they understand concepts but struggle with implementation. Based on these patterns, it adjusts difficulty, provides targeted hints, and suggests alternative learning resources.
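The adjustment loop described above can be approximated with a small rule: look at a window of recent attempts, move difficulty up or down based on accuracy, and offer a targeted hint when the same error keeps recurring. The thresholds, window size, and error tags below are assumptions chosen for the example; actual tutoring systems generally rely on richer learner models.

```python
from collections import Counter
from typing import Optional

MIN_LEVEL, MAX_LEVEL = 1, 5  # assumed difficulty scale

# Each attempt is (was_correct, error_tag); error_tag is None when correct.
Attempt = tuple[bool, Optional[str]]


def next_step(attempts: list[Attempt], current_level: int,
              window: int = 5) -> tuple[int, Optional[str]]:
    """Return (next difficulty level, recurring error to target with a hint)."""
    recent = attempts[-window:]
    if not recent:
        return current_level, None

    accuracy = sum(1 for ok, _ in recent if ok) / len(recent)

    # A hint is triggered only when the same error appears at least twice.
    error_counts = Counter(tag for ok, tag in recent if not ok and tag)
    hint = None
    if error_counts:
        tag, count = error_counts.most_common(1)[0]
        if count >= 2:
            hint = tag

    if accuracy >= 0.8:
        level = min(current_level + 1, MAX_LEVEL)
    elif accuracy < 0.5:
        level = max(current_level - 1, MIN_LEVEL)
    else:
        level = current_level
    return level, hint


history = [
    (True, None),
    (False, "off_by_one"),
    (False, "off_by_one"),
    (True, None),
    (False, "null_check"),
]
print(next_step(history, current_level=3))
```

Tagging errors by type, not just right or wrong, is what lets the system tell a conceptual gap from a slip in implementation.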

Cost savings with ITS are substantial but secondary to quality improvements. Traditional technical training often requires taking experts offline to teach, creating productivity losses beyond training costs. ITS allows experts to codify their knowledge once, then lets the system handle delivery and basic questions. Experts only intervene for complex issues or strategic discussions.

What Role Does Natural Language Processing Play in Personalized Learning Experiences?

Natural Language Processing (NLP) has quietly become the backbone of genuinely personalized corporate learning. The real breakthrough is AI understanding what we mean, what we’re struggling with, and what we need to hear to finally understand difficult concepts.

Consider how most employees actually learn at work. They “Google” problems, ask colleagues questions, and piece together understanding from multiple sources. NLP-powered learning systems replicate this natural process but with infinite patience and perfect memory. An employee can ask the same question sixteen different ways until something clicks, without judgment or frustration.

Modern NLP analyzes the language employees use when discussing challenges, identifies conceptual misunderstandings, and adjusts explanations accordingly. When someone says, “I don’t get why we need microservices,” the system recognizes this isn’t really about microservices—it’s about understanding architectural trade-offs and business value. It responds with business-focused examples rather than technical definitions.

Writing analysis has become particularly powerful for leadership development. NLP systems analyze emails, reports, and presentations to identify communication patterns that might limit career growth. They spot passive voice overuse, unclear arguments, or tones that might alienate colleagues. But instead of generic writing tips, they provide specific, contextual coaching. “Your status updates focus heavily on problems without solutions. Try this structure instead…”

Sentiment analysis adds another layer of personalization. The system recognizes frustration, confusion, or boredom in written responses and adjusts accordingly. If someone’s answers become increasingly terse, it might suggest a break, switch to a different format, or provide encouragement. This emotional intelligence makes digital learning feel surprisingly human.

How Do Predictive Analytics Tools Help Identify Future Skill Gaps?

Most companies discover skill gaps when it’s too late: when projects fail, deadlines slip, or competitors leap ahead with capabilities they lack. Predictive analytics turns this reactive scramble into proactive preparation. However, the gap between having predictive tools and actually using them remains wide.

These systems work by analyzing patterns across multiple data streams. They examine job postings in your industry, patent filings, academic research, technology adoption curves, and regulatory changes. Then they map these external trends against your internal capabilities, identifying gaps before they become critical. The analysis goes beyond simple trend-spotting. Sophisticated tools understand skill adjacency and evolution paths. They recognize that someone with strong SQL skills can more easily transition to Python for data analysis than someone without database experience. This mapping helps organizations plan realistic reskilling programs rather than hoping for miraculous transformations.

Internal data provides equally valuable insights. By analyzing project outcomes, performance reviews, and collaboration patterns, predictive tools identify which skills actually drive success in your specific context. Sometimes the results surprise everyone.

The warning signs emerge months or years before a crisis hits. Predictive analytics might notice that your senior Java developers are aging toward retirement, while younger employees favor Python. It calculates how long you have before institutional knowledge walks out the door and recommends specific knowledge transfer and upskilling initiatives. This foresight transforms panicked hiring sprees into thoughtful capability building.

How Can Virtual Reality Training Simulations Accelerate Complex Skill Development?

Virtual Reality (VR) training has moved past the gimmick phase into serious business tool territory. The technology now delivers experiences so realistic that the brain responds as if they’re actually happening. This psychological reality makes VR particularly powerful for high-stakes training where mistakes in real life could be catastrophic or expensive.

The acceleration comes from safe failure. Employees can make mistakes, even catastrophic ones, and immediately see consequences without real-world damage. A chemical plant operator can trigger a virtual explosion, watch the devastation unfold, then rewind and try again. This freedom to fail removes the anxiety that inhibits learning in real-world training scenarios.

Muscle memory development can happen faster in VR than in traditional training. Some studies report that people trained in VR complete tasks 4x faster than classroom-trained peers and 2x faster than eLearners. The combination of visual, auditory, and kinesthetic engagement creates deeper neural pathways.

Emotional and social skills also benefit enormously from VR’s immersive nature. Customer service representatives practice handling angry customers who feel genuinely intimidating. Managers navigate difficult conversations with virtual employees who exhibit realistic emotional responses. These scenarios would be impossible or unethical to create with real people, but are essential for skill development.

How Do Automated Content Generation Tools Maintain Quality While Scaling Learning Materials?

The content creation bottleneck has plagued corporate learning forever. Subject matter experts are too busy to create training, and professional instructional designers are expensive and slow. By the time content gets created, reviewed, and approved, it’s often outdated. Automated content generation promises to solve this, but quality concerns make organizations hesitate.

Quality control happens through layered validation. AI generates initial content based on templates and best practices. Then it runs automated quality checks for readability, completeness, and alignment with learning objectives. Human experts review for accuracy and nuance. Finally, learner feedback continuously improves the content. This multi-stage process produces content that often exceeds human-created materials in consistency and instructional effectiveness.
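The automated stage of that pipeline can be as plain as a checklist run before any human sees the draft. The sketch below assumes a hypothetical three-section template and a crude readability proxy (average sentence length); both are placeholders for whatever standards a team actually adopts. Drafts that fail go back for regeneration, and only clean drafts reach expert review.

```python
import re

# Assumed template and readability limit, for illustration only.
REQUIRED_SECTIONS = ("Objective", "Practice", "Summary")
MAX_AVG_SENTENCE_WORDS = 25


def quality_issues(draft: str, objective_terms: list[str]) -> list[str]:
    """Run completeness, readability, and objective-alignment checks on a draft."""
    issues = []
    lowered = draft.lower()

    # Completeness: every template section must appear.
    for section in REQUIRED_SECTIONS:
        if section.lower() not in lowered:
            issues.append(f"missing section: {section}")

    # Readability proxy: average words per sentence.
    sentences = [s for s in re.split(r"[.!?]+", draft) if s.strip()]
    if sentences:
        average = sum(len(s.split()) for s in sentences) / len(sentences)
        if average > MAX_AVG_SENTENCE_WORDS:
            issues.append("sentences too long")

    # Alignment: each learning-objective term must be covered.
    for term in objective_terms:
        if term.lower() not in lowered:
            issues.append(f"objective term not covered: {term}")
    return issues


def route(draft: str, objective_terms: list[str]) -> tuple[str, list[str]]:
    """Send clean drafts to human review and flawed drafts back for regeneration."""
    issues = quality_issues(draft, objective_terms)
    return ("regenerate", issues) if issues else ("human_review", [])


good_draft = (
    "Objective: reset a password safely. "
    "Practice: walk through the reset flow. "
    "Summary: verify identity first."
)
print(route(good_draft, ["password", "identity"]))
print(route("Objective: reset a password.", ["identity"]))
```

Note what the checks cannot do: none of them verifies that the content is accurate. That remains the job of the human expert stage.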

Version control and updates become manageable at scale. When regulations change or products update, AI can automatically identify and revise affected content across hundreds of courses. What once required months of manual review happens in hours.

Localization and accessibility happen automatically. Content is generated simultaneously in multiple languages with cultural adaptations. Screen reader compatibility, captions, and alternative formats are created by default rather than as expensive afterthoughts. This inclusive approach dramatically expands the audience that can benefit from training materials.

What Governance, Ethics, and Data Safeguards Keep AI-Powered L&D from Going Off the Rails?

Trust is oxygen. Lose it, and adoption stalls. Governance spans policy, technical controls, ethical review, transparency, and incident response.

Here are the parts that actually keep trust intact:

- Data Minimization: Ingest only signals with clear value. Calendar scraping or full chat transcript mining rarely survives a cost‑benefit ethics test.

- Consent & Notice: Clear language: what data, for what outcome, retention period, opt‑out pathways.

- Privacy Protection: Pseudonymize where feasible. Encrypt at rest and in transit. Mask personally sensitive fields before retrieval embedding to avoid leakage.

- Model Risk Management: Maintain model cards detailing training data scope (if custom), limitations, evaluation results (bias, hallucination rate), and update cadence.

- Human Oversight: High-stakes decisions (promotion readiness, performance remediation) require human review of AI summaries. The system should cite underlying evidence, enabling contestability.

- Bias Mitigation: Periodic audits comparing readiness score distributions across demographic groups (where lawful to monitor). If disparities arise, trace the feature contributions and retrain or adjust the weighting.

- Incident Response: Run tabletop exercises (model outputs outdated safety protocol; misclassification of competency). Document detection, containment, communication, and remediation steps.
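The bias audit in the list above can start with a very small calculation: the share of each group that clears the readiness cutoff, and the ratio between the lowest and highest share. The sketch below uses invented records, an assumed cutoff of 0.7, and an assumed review threshold of 0.8 for that ratio. A low ratio is a prompt to investigate feature contributions, not proof of bias by itself.

```python
from collections import defaultdict

# Assumed audit parameters, for illustration only.
READINESS_CUTOFF = 0.7
REVIEW_THRESHOLD = 0.8


def ready_rates(records: list[tuple[str, float]], cutoff: float) -> dict[str, float]:
    """Share of each group whose readiness score meets the cutoff."""
    counts = defaultdict(lambda: [0, 0])  # group -> [ready, total]
    for group, score in records:
        counts[group][1] += 1
        if score >= cutoff:
            counts[group][0] += 1
    return {group: ready / total for group, (ready, total) in counts.items()}


def disparity_ratio(rates: dict[str, float]) -> float:
    """Lowest group rate divided by the highest; 1.0 means parity."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody is ready in any group, so there is no gap to report
    return min(rates.values()) / highest


# Invented (group, readiness score) records.
records = [
    ("A", 0.9), ("A", 0.8), ("A", 0.4), ("A", 0.7),
    ("B", 0.9), ("B", 0.3), ("B", 0.2), ("B", 0.5),
]
rates = ready_rates(records, READINESS_CUTOFF)
ratio = disparity_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD
print(rates, round(ratio, 3), needs_review)
```

With small groups the ratio swings wildly on a handful of people, so audits of this kind usually pair it with minimum sample sizes before drawing conclusions.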

Ethical nuance: Over-personalization may inadvertently infer medical, union, or other protected statuses via pattern recognition. Guard against unintended inference logging. Provide “Why am I seeing this recommendation?” explanations. Permit corrections: “I no longer perform task X.”

Keep the oversight group mixed: people from learning, security, legal, privacy, diversity and inclusion, plus a few frontline managers. Meet every month at the start, then shift to quarterly once things settle. Inside the company, share a simple page that lists which models are running, how they’re being checked, recent changes, and an easy way to reach the team with questions.

Moving Forward

The path ahead requires more than better algorithms or stricter policies. It demands fundamental shifts in how organizations think about development, technology, and human dignity.

Organizations serious about ethical AI in L&D start with harder questions. Not “how can we optimize learning?” but “what kind of workplace are we creating?” Not “what does the data suggest?” but “whom might this harm?” Not “is this legal?” but “is this right?”

The competitive advantage might surprise skeptics. Companies that prioritize ethics in their AI learning systems report higher engagement, greater innovation, and stronger retention. Employees trust organizations that demonstrate genuine concern for their development beyond productivity metrics. They invest themselves in companies that see them as humans to develop, not resources to optimize.

Ethics is a practice, not a destination. Each new capability raises fresh questions. Every optimization creates potential for discrimination. All efficiency gains risk human costs. Organizations that acknowledge this ongoing tension navigate it better than those claiming to have solved it.

The future of ethical AI in L&D belongs to organizations that prioritize people alongside technology. Partner with Hurix Digital for trusted workforce learning solutions that put your employees first. Explore how we can help by contacting us today.

Chief Learning & Innovation Officer –

Learning Strategy & Design at Hurix Digital, with 20+ years in instructional design and digital learning. She leads AI‑driven, evidence-based learning solutions across K‑12, higher ed, and corporate sectors. A thought leader and speaker at events like Learning Dev Camp and SXSW EDU.

A Space for Thoughtful